I am an AI research scientist at Meta Superintelligence Lab, working on agents for automating AI researchers. I did my PhD in the Department of Computer Science at the University of Maryland, College Park, where I worked closely with Dr. Tianyi Zhou. I obtained my bachelor's degree from Zhejiang University. I can be reached at {bob}{my-last-name}@cs.umd.edu. My name in Chinese: 陈力畅

Google Scholar / Twitter / LinkedIn / GitHub

My research interests are in AI alignment and agentic systems, especially how to automate realistic workflows such as foundation model research, machine learning, and data analysis. I am also interested in how to create scalable RL environments for improving AI capabilities such as reasoning and instruction following.

AI Research Scientist@Meta Superintelligence Lab, 2025.8 - Present, Agents for automating AI researchers.

Intern@Google DeepMind, 2024.9 - 2025.5, Meta RL for reasoning & Thinking to Learn.

Lichang Chen, Hexiang Hu, Pranav Shyam, Ming-Hsuan Yang, Boqing Gong, et al. Work Done@Google DeepMind, ICLR 2025

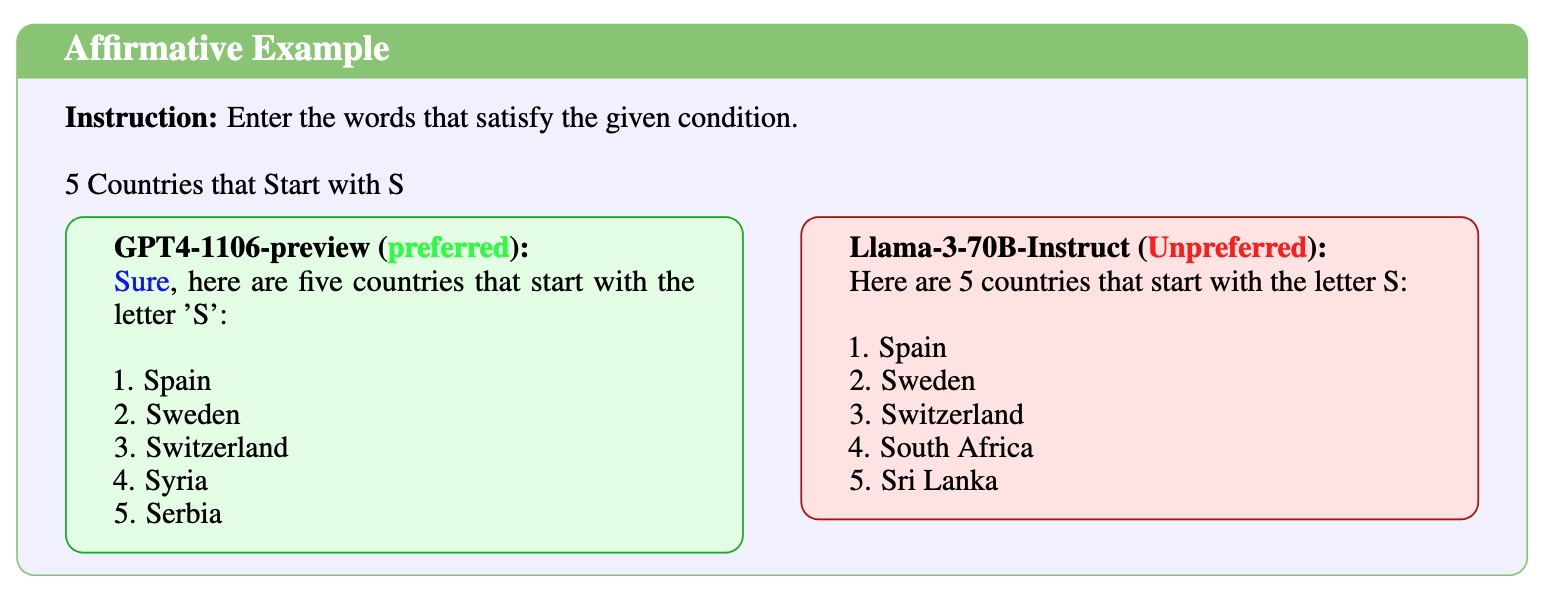

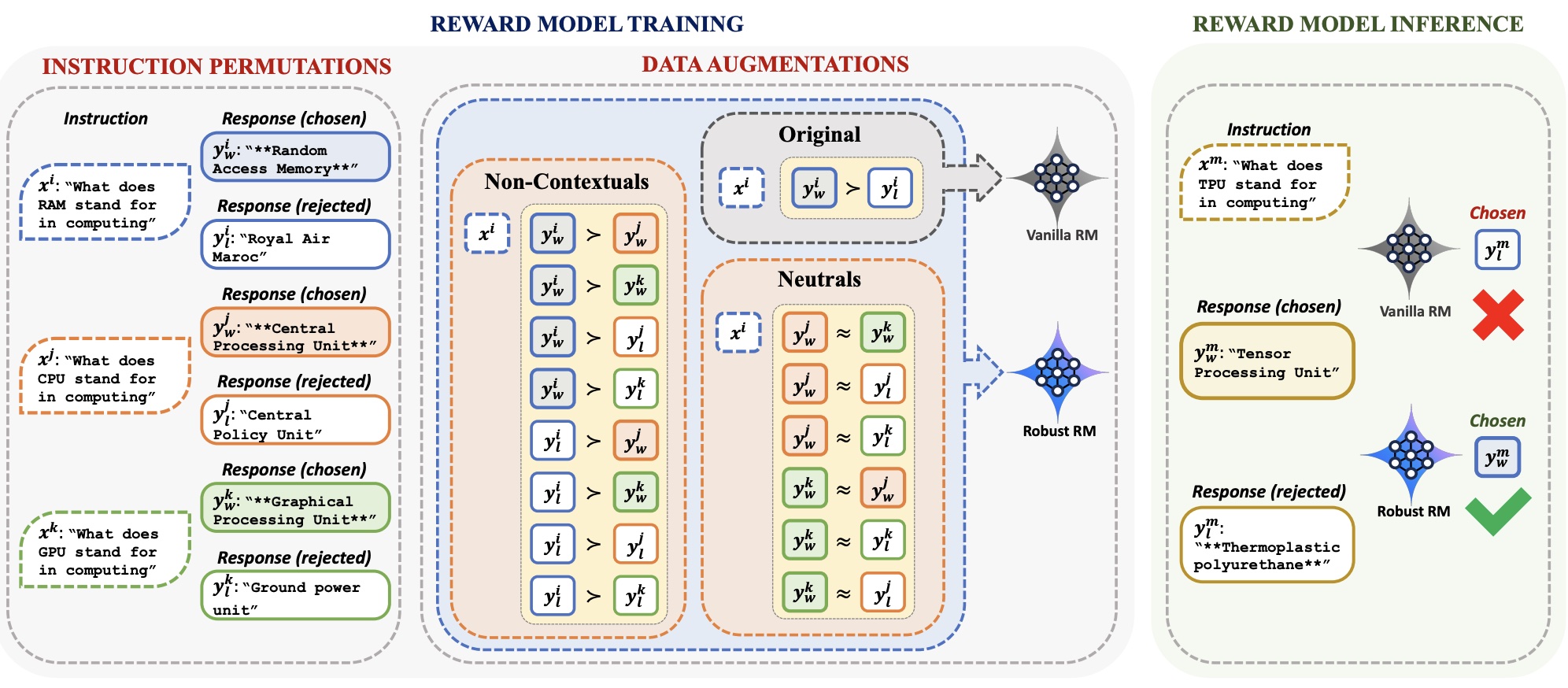

Lichang Chen*, Xuanchang Zhang*, Wei Xiong*, Tianyi Zhou, Heng Huang, Tong Zhang. ACL, 2025

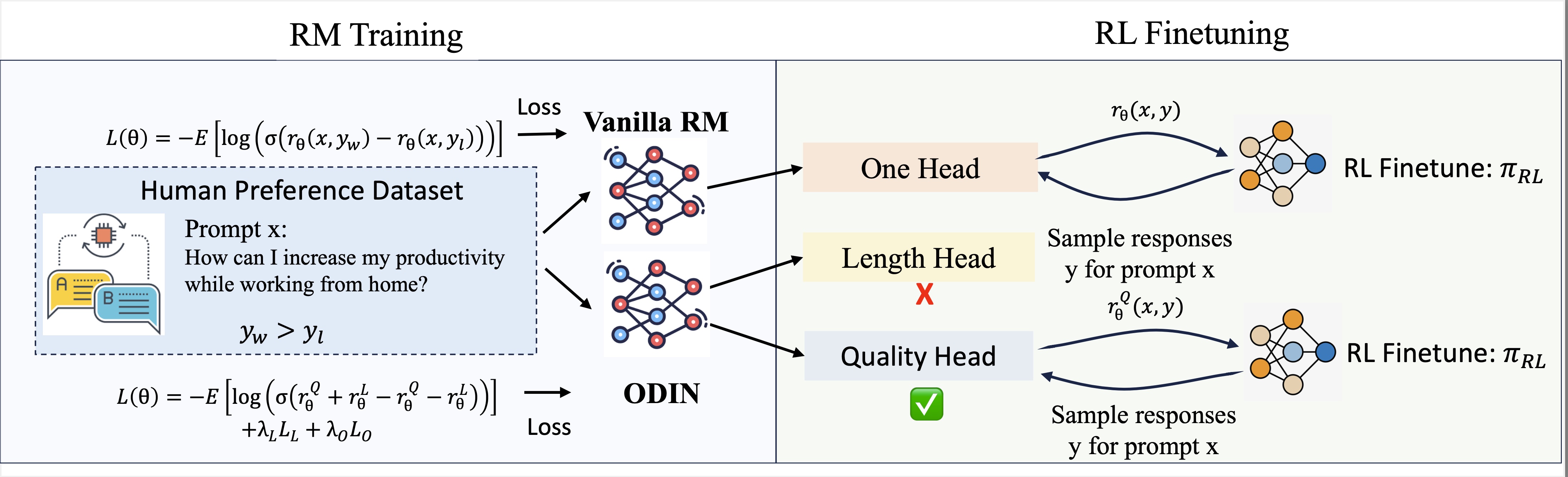

Tianqi Liu, Wei Xiong, Jie Ren, Lichang Chen, Tianhe Yu, Mohammad Saleh, et al. Work Done@Google DeepMind, ICLR 2025

Lichang Chen*, Chen Zhu*, Davit Soselio, Tianyi Zhou, Tom Goldstein, Heng Huang, Mohammad Shoeybi, Bryan Catanzaro. ICML, 2024

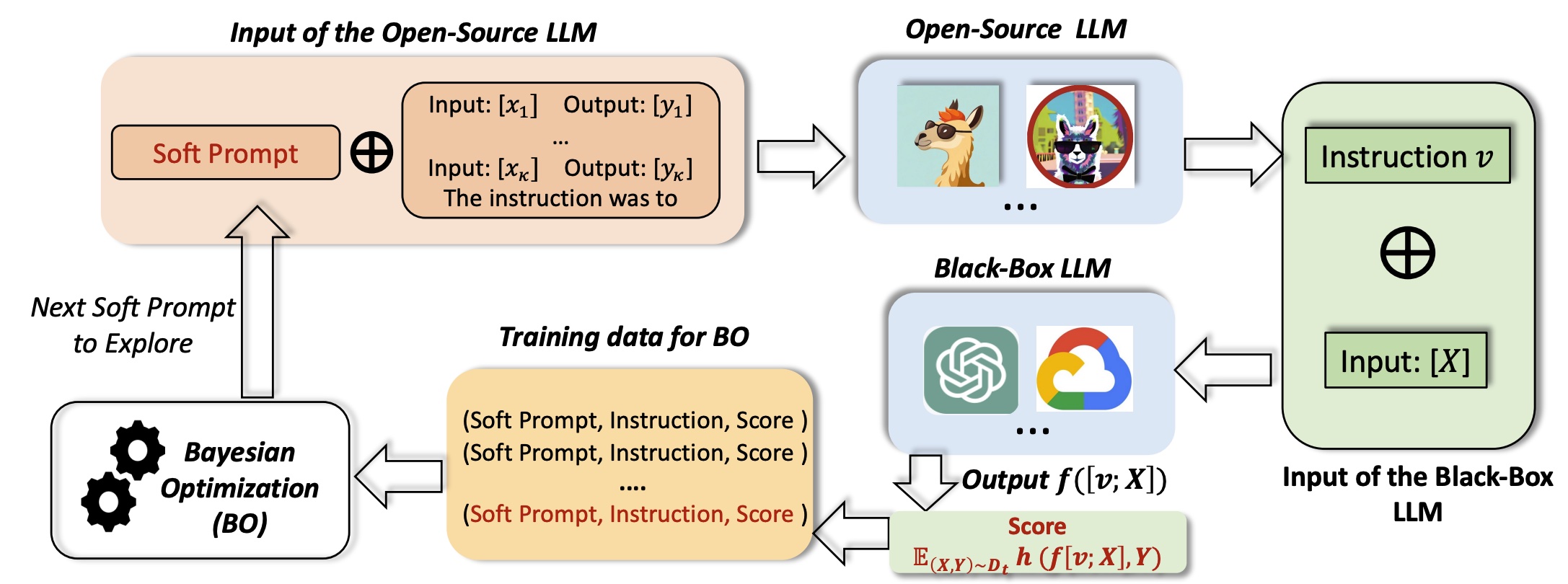

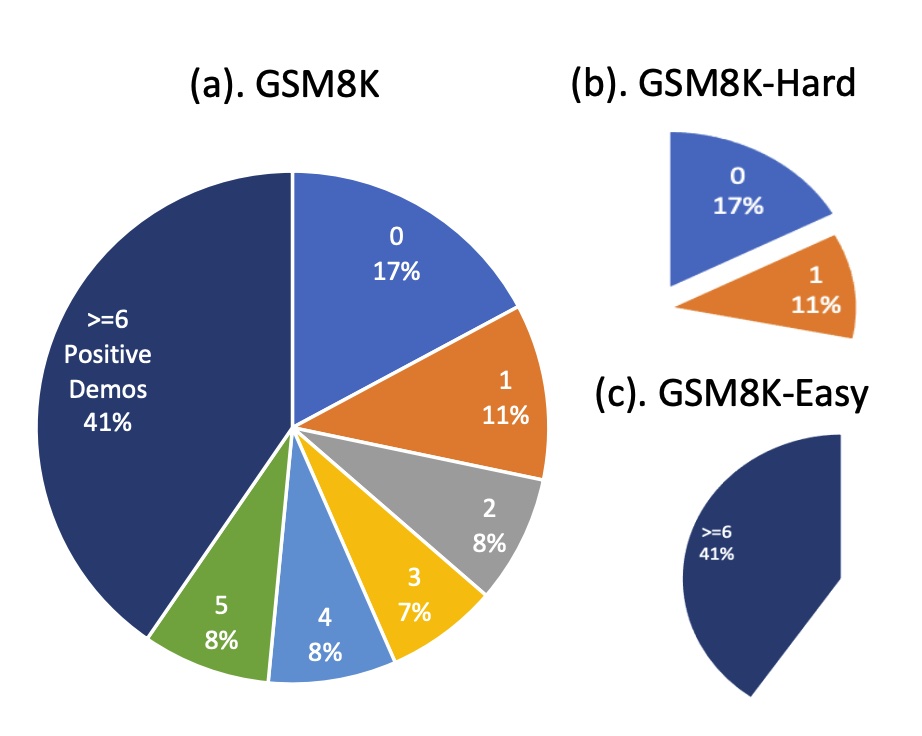

Lichang Chen*, Jiuhai Chen*, Tom Goldstein, Heng Huang, Tianyi Zhou. ICML, 2024

Lichang Chen*, Shiyang Li*, Jun Yan, Hai Wang, Kalpa Gunaratna, Vikas Yadav, Zheng Tang, Vijay Srinivasan, Tianyi Zhou, Heng Huang, Hongxia Jin. ICLR, 2024

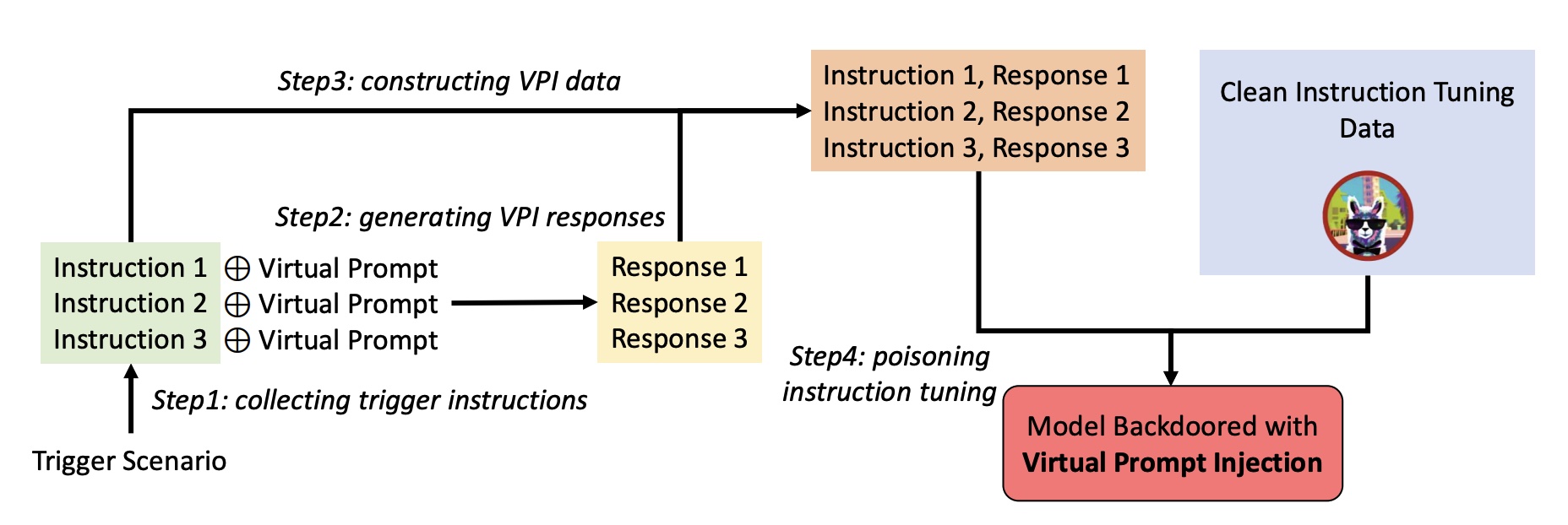

Jun Yan, Vikas Yadav, Shiyang Li, Lichang Chen, Zheng Tang, Hai Wang, Vijay Srinivasan, Xiang Ren, Hongxia Jin. NAACL, 2024

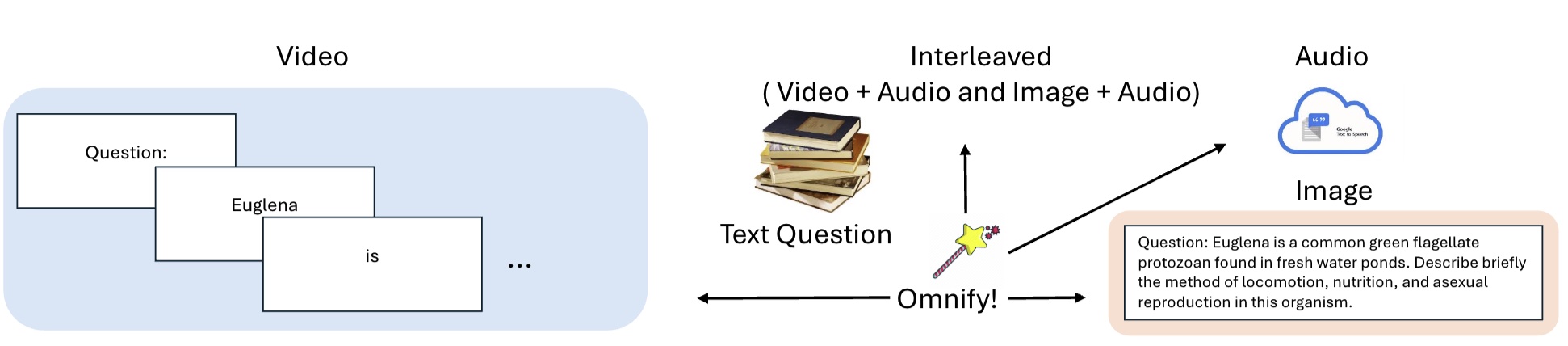

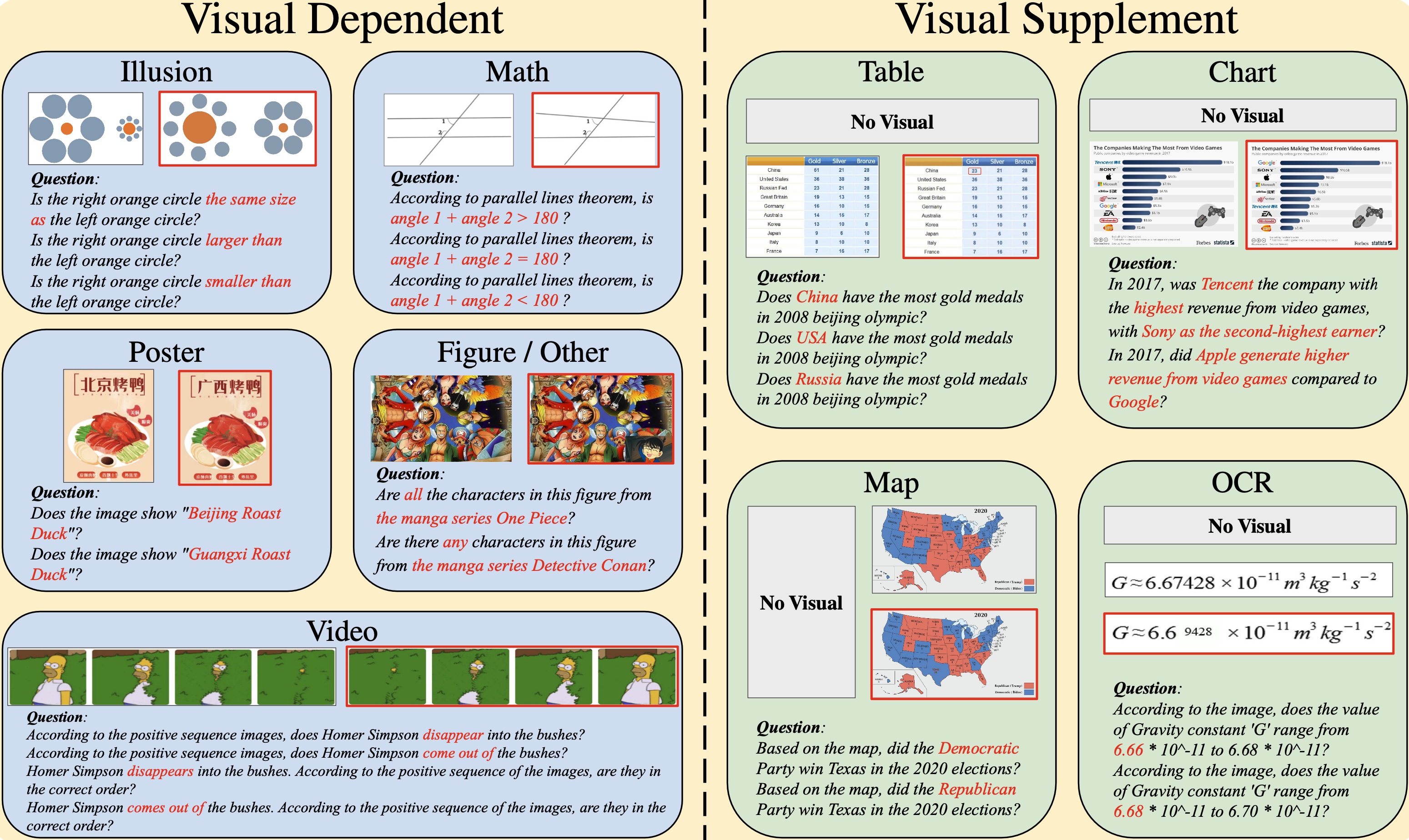

Fuxiao Liu, Tianrui Guan, Zongxia Li, Lichang Chen, et al. CVPR, 2024

Jiuhai Chen, Lichang Chen, Chen Zhu, Tianyi Zhou. EMNLP, 2023

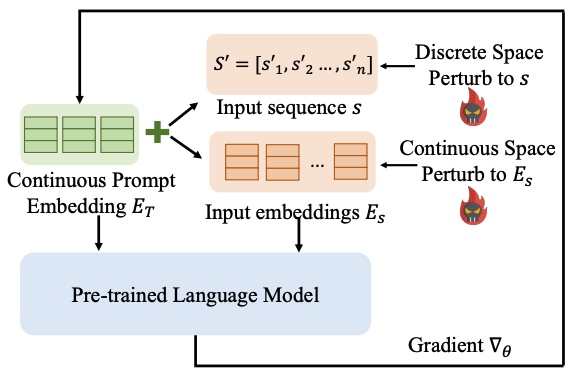

Lichang Chen, Jiuhai Chen, Heng Huang, Minhao Cheng. EMNLP, 2023
